The Unfulfilled Promise of the Quality Movement

Monday, January 20, 2014 at 9:05AM

Richard A. Robbins, MD

Phoenix Pulmonary and Critical Care Research and Education Foundation

Gilbert, AZ

Abstract

In the latter half of the 20th century efforts to improve medical care became known as the quality movement. Although these efforts were often touted as “evidence-based”, the evidence was often weak or nonexistent. We review the history of the quality movement. Although patient-centered outcomes were initially examined, these were replaced with surrogate markers. Many of the surrogate markers were weakly or non-evidence based interventions. Furthermore, the surrogate markers were often “bundled”, some evidence-based and some not. These guidelines, surrogate markers and bundles were rarely subjected to beta testing, and when carefully scrutinized, rarely correlated with improved patient-centered outcomes. Based on this lack of improvement in outcomes, the quality movement has not improved healthcare. Furthermore, the quality movement will not likely improve clinical performance until recommended or required interventions are tested using randomized trials.

Introduction

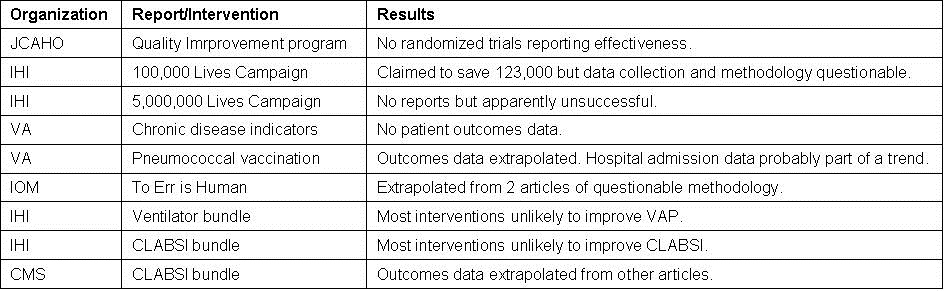

The quality movement has been touted as improving patient care at lower costs. However, there are very few data showing that “clinically meaningful” outcomes have improved as a result of the quality movement. This manuscript will examine some of the major quality improvement efforts (Table 1).

Table 1. Major quality programs examined in this manuscript.

In addition, the manuscript will point out some of the key issues with quality improvement measures that are particularly relevant to pulmonary/critical care physicians, such as pneumococcal vaccination in adults and the ventilator-associated pneumonia (VAP) and central line-associated bloodstream infection (CLABSI) bundles.

Early History of the Quality Movement

Origins of the quality-of-care movement can be traced back to the nineteenth century. An early study assessing the efficacy of hospital care was the work of the British nurse, Florence Nightingale (1). She reported that the hospital in Scutari during the Crimean War had an exceedingly high mortality rate.

In 1910, Flexner issued a critical report of the U.S. medical educational system and called for major reforms. In addition to reforms in education, the report called for full time faculty who held appointments in a teaching hospital with adequate space and equipment (2). Shortly after the Flexner report, Codman developed the medical audit, a process for evaluating the overall practice of a physician including the outcomes of surgery (3).

A survey conducted by the American College of Surgeons of 700 hospitals in 1919 concluded that few were equipped to provide patients with even a minimal level of quality of care. The College went on to establish a program of minimum hospital standards (4). Later the program was transferred to the Joint Commission on Accreditation of Hospitals (now the JCAHO or Joint Commission). However, the Joint Commission was largely ineffective until 1965. At that time, the Joint Commission became one of the most powerful accrediting groups through its role in certifying eligibility for receipt of federal funds from Medicare and Medicaid.

As the role of government in paying for medical care has grown, so has the demand for assurance of the quality of the healthcare services. In the mid-1960s, utilization review activities were required to receive reimbursement for in-patient services from Medicare and Medicaid. Despite utilization review, increasing concern for accountability in healthcare arose from two sources. One was the consumer movement (5). This was fueled by continual reports of variation in services offered by different physicians and by different health care institutions. Usually the reports implied or stated that the care was substandard. The second was a dramatic increase in medical malpractice suits further eroding the public confidence in the medical profession.

The most common definition of quality of care used in the later twentieth century was authored by Donabedian in 1966 (6). He identified three major foci for the evaluation of quality of care: outcome, process and structure. Outcome referred to the condition of the patient and the effectiveness of healthcare, including traditional outcome measures such as morbidity, mortality, length of stay, readmission, etc. Process of care represented an alternative approach which examined the process of care itself rather than its outcomes. These processes are often referred to as surrogate markers. The structural approach involved examining the physical aspects of health care, including buildings, equipment, and supplies.

Joint Commission (JCAHO)

The structural approach was often emphasized in the Joint Commission surveys in the 1960’s and 70’s. Beginning in the 1970’s the Joint Commission began to address outcomes by requiring hospitals to perform medical audits. However, the Joint Commission soon realized that the audit was “tedious, costly and nonproductive” (7). Efforts to meet audit requirements were too frequently “a matter of paper compliance, with heavy emphasis on data collection and few results that can be used for follow-up activities. In the shuffle of paperwork, hospitals often lost sight of the purpose of the evaluation study and, most important, whether change or improvement occurred as a result of audit”. Furthermore, survey findings and research indicated that patient care and clinical performance had not improved (7).

In response to the ineffectiveness of the audit and the call to improve healthcare, the Joint Commission introduced a new quality assurance standard in 1980. The standard consisted of five elements:

- The integration or coordination of all quality assurance activities into a comprehensive program;

- A written plan for the program;

- A problem-focused approach to review;

- Annual reassessment of the program;

- Measurable improvement in patient care or clinical performance.

Hospitals complied with most aspects of these five elements. Over 90% had a written plan, annual reassessment, and integration of the quality assurance activities under the hospital administration by mid-1982 (8). However, the other elements remained largely ignored. Physician involvement was often perfunctory either because of their reluctance to be involved or because of hospital administration reluctance to have them involved. Hospital boards and administrators had little idea of which problems were most important and little idea of how to proceed with evaluation and interpretation of the results. Given these limitations it is not surprising that data demonstrating measurable improvement in patient care was lacking.

A number of superficial name changes occurred over the next few years. These included quality improvement, total quality improvement, risk management and quality management. Although each was touted as an improvement in quality assurance, none was fundamentally different from the original concept and none demonstrated a convincing improvement in any patient-centered outcomes.

Institute for Healthcare Improvement (IHI)

Recognizing weaknesses in the Joint Commission processes, private organizations attempted to develop programs that enhanced quality. One was the Institute for Healthcare Improvement (IHI). Founded by Donald Berwick in 1989, IHI was quite successful in attracting funding from a number of charitable organizations such as Kaiser Permanente Community Benefit, the Josiah Macy, Jr. Foundation, the Rx Foundation, the MacArthur Foundation, the Robert Wood Johnson Foundation, the Bill & Melinda Gates Foundation, the Health Foundation, and the Izumi Foundation. Additional funding was obtained from insurance companies such as the Blue Cross and Blue Shield Association, the Cardinal Health Foundation, the Aetna Foundation, and the Blue Shield of California Foundation. Some pharmaceutical funding was also obtained from Baxter International, Inc. and the Abbott Fund. In addition, through the IHI “Open School” many hospitals, both academic and private, supported IHI activities. These included the Mayo Clinic, Banner Good Samaritan Medical Center, St. Joseph's Hospital and Medical Center, the University of Arizona Medical Center, the University of Colorado at Denver, and University of New Mexico - Albuquerque (9).

Under Berwick’s leadership, IHI launched a number of proposals to improve healthcare. A noteworthy initiative from the IHI was the 18-month 100,000 Lives Campaign which began in January 2005. This campaign encouraged hospitals to adopt six best practices to reduce harm and deaths. The interventions included deployment of rapid response teams, a medication reconciliation process, interventions for acute myocardial infarction, a central line management process, administering antibiotics at a specific time to prevent surgical site infections, and using a ventilator protocol to minimize ventilator-associated pneumonia. Review of the evidence basis for at least 3 of these interventions reveals fundamental flaws. A large cluster-randomized, controlled trial demonstrated that medical response teams greatly increased medical response team calling, but did not substantially affect the incidence of cardiac arrest, unplanned ICU admissions, or unexpected death (10). Furthermore, the interventions to prevent central line infections and ventilator-associated pneumonia were either non- or weakly evidence-based and unlikely to improve patient outcomes (11,12).

Despite these limitations, IHI announced in June 2006 that the campaign prevented 122,300 avoidable deaths (13). Interestingly, the flawed methodology and sloppy estimation of the number of lives saved were pointed out by Wachter and Pronovost (14) in the Joint Commission Journal on Quality and Patient Safety. IHI failed to adjust its estimates of lives saved for case mix, which accounted for nearly three out of four "lives saved." The actual mortality data were supplied to the IHI by hospitals without audit, and 14% of the hospitals submitted no data at all. Moreover, the reports from even those hospitals that did submit data were usually incomplete. The most striking example of this is the fact that the IHI announcement of lives saved in 18 months was based on submissions through March, not June, 2006, accounting for only 15 months. The final three months were extrapolated from hospitals’ previous submissions. Although not reported by IHI, it seems likely that there were even more missing data beyond that described above. One important confounder is the fact that the campaign took place against a background of declining inpatient mortality rates (14).

Whether this decline was a result of some of the quality improvement efforts promoted by IHI and others or other factors is unclear. Undeterred, the IHI proceeded with the 5,000,000 Lives Campaign claiming that over 80% of US hospitals were participants (15). However, this campaign ended in 2008 and was apparently not successful (16). Although IHI promised to publish results in major medical journals, a literature search revealed no published outcomes.
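The size of the adjustments to the 100,000 Lives Campaign estimate can be made concrete with some rough arithmetic. The following is a minimal sketch in Python that uses only the figures quoted above (122,300 claimed lives, 15 of 18 months actually reported). The value 0.75 is an assumption standing in for "nearly three out of four", and the function names are hypothetical. It is a back-of-the-envelope illustration, not a re-analysis of the campaign data.

```python
def remaining_after_case_mix(claimed_lives, case_mix_share):
    """Lives left in the claim once the case-mix share is removed."""
    return claimed_lives * (1 - case_mix_share)


def extrapolated_fraction(months_reported, months_total):
    """Fraction of the campaign period that was extrapolated, not reported."""
    return (months_total - months_reported) / months_total


CLAIMED_LIVES = 122_300   # IHI announcement, June 2006 (13)
CASE_MIX_SHARE = 0.75     # assumption: "nearly three out of four" (14)
MONTHS_REPORTED = 15      # submissions through March 2006
MONTHS_TOTAL = 18         # stated length of the campaign

if __name__ == "__main__":
    remaining = remaining_after_case_mix(CLAIMED_LIVES, CASE_MIX_SHARE)
    share = extrapolated_fraction(MONTHS_REPORTED, MONTHS_TOTAL)
    print(f"Claim remaining after case-mix adjustment: {remaining:,.0f}")
    print(f"Share of campaign period extrapolated: {share:.1%}")
```

Under these assumptions roughly a quarter of the announced figure would survive case-mix adjustment, and one sixth of the campaign period rests on extrapolation rather than submitted data. Neither number accounts for the unaudited or missing hospital reports described above.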

Department of Veterans Affairs (VA)

The Department of Veterans Affairs (VA) has played a pivotal role in the quality movement. Although VA hospitals have been required to be Joint Commission approved since the Reagan Administration, the quality movement began with the appointment of Kenneth W. Kizer as the VA's undersecretary of health in 1994. An emergency room physician, Kizer was Director of California Emergency Medical Services, Chief of Public Health for California and Director of the California Department of Health Services before coming to the VA (18).

Kizer mandated several interventions. One was the installation and utilization of an electronic healthcare record. The second was a set of performance measures which became known as the chronic disease indicators (19). In order to encourage performance of these interventions, Kizer initiated pay-for-performance, not to the doctors and nurses doing the interventions, but to the top administration of the hospital. The focus changed from meeting the needs of the patient to meeting the performance measures so the administrators could receive their bonuses. From 1994 to 2000 nearly all the performance measures improved. Three improved dramatically: pneumococcal vaccination, annual measurement of hemoglobin A1C, and smoking cessation (19). However, the evidence basis that these interventions improved patient outcomes was questionable (20). Furthermore, there were no outcome data such as morbidity, mortality, admission rates, length of stay, etc. that supported the contention that the health of veterans improved.

Although politics forced Kizer’s resignation in 1999, he was followed by his deputy, Thomas L. Garthwaite and eventually by his Chief Quality and Performance Officer, Jonathan B. Perlin. Perlin realized that outcome data was needed and promised that this would be forthcoming. On August 11, 2003 at the First Annual VA Preventive Medicine Training Conference in Albuquerque, NM, Perlin claimed that the increase in pneumococcal vaccination saved 3914 lives between 1996 and 1998 (21). Furthermore, he claimed pneumococcal vaccination resulted in 8000 fewer admissions and 9500 fewer days of bed care between 1999 and 2001. However, this data was not measured but based on extrapolation from a single, non-randomized, observational study (22). Although no randomized study examining patient outcomes with the 23-polyvalent pneumococcal vaccine has been performed, other studies do not support the efficacy of the vaccine in adults (23-25). Furthermore, there was an overall downward trend in hospital admissions. The reduction in hospital admissions for pneumonia appeared to be nothing more than part of this trend since the number of outpatient visits for pneumonia increased (26).

Institute of Medicine (IOM)

The Institute of Medicine (IOM) is a non-profit, non-governmental organization founded in 1970, under the congressional charter of the National Academy of Sciences (27). Its purpose is to provide national advice on issues relating to biomedical science, medicine, and health, and its mission is to serve as adviser to the nation to improve health.

The IOM attracted little attention until publication of “To Err is Human” in 1999 (28). This report was authored by the IOM’s committee on quality of health care in America whose members included Donald Berwick from the Institute for Healthcare Improvement and Mark Chassin, now president and chief executive officer of the Joint Commission. The report estimated that 44,000 to 98,000 deaths occur annually in the US due to medical errors. It was presented with drama and an assertion of lack of previous attention. This was followed by a plea to the medical profession to remember its promise to "do no harm" and that "at a very minimum, the health system needs to offer that assurance and security to the public." The clear implication was that doctors were killing their patients at a terrible rate and needed oversight and direction. The Clinton administration clearly heard this message and issued an executive order instructing government agencies that conduct or oversee health-care programs to implement proven techniques for reducing medical errors, and creating a task force to find new strategies for reducing errors. Congress soon launched a series of hearings on patient safety, and in December 2000 it appropriated $50 million to the Agency for Healthcare Research and Quality to support a variety of efforts targeted at reducing medical errors.

The two studies on which the IOM based its mortality estimates both came from the Harvard School of Public Health where Harvey Fineberg, president of the IOM, had been dean. The higher estimate was based on a 1991 study published in the New England Journal of Medicine (29). In this study, 30,121 medical records from 51 randomly selected acute care hospitals in New York State were reviewed and population estimates of injuries were made. To do this the records were screened by trained nurses and medical-records analysts; if a record was screened as positive for a potentially adverse event, two physicians independently reviewed the record. The second study, on which the lower mortality estimate was based, was published in Medical Care in 2000 (30). The same group from the Harvard School of Public Health used a similar strategy and examined 15,000 records from acute care hospitals in Utah and Colorado from 1992.

Although examples were given of what constituted an adverse event and whether it was due to negligence, it is “difficult to judge whether a standard of care has been met” leading to a “relatively low level of reliability…” according to the first article. In both articles, negligence remained undefined which ultimately means that the determination of negligence relied on judgment. Unexplained is why the second article showed deaths due to negligence in Utah and Colorado at half the rate of New York.

Hofer et al. (31) examined medical errors in large part as a response to “To Err is Human” and the Harvard School of Public Health studies on which the IOM based their mortality estimates. They made four principal observations. “First, errors have been defined in terms of failed processes without any link to subsequent harm. Second, only a few studies have actually measured errors, and these have not described the reliability of the measurement. Third, no studies directly examine the relationship between errors and adverse events. Fourth, the value of pursuing latent system errors (a concept pertaining to small, often trivial structure and process problems that interact in complex ways to produce catastrophe) using case studies or root cause analysis has not been demonstrated in either the medical or nonmedical literature”.

Patient Safety

The IOM report, “To Err is Human”, resulted in yet another name change for the quality movement, the patient safety movement. An article in JAMA by Leape, Berwick and Bates (32) in 2005 entitled “What Practices Will Most Improve Safety? Evidence-Based Medicine Meets Patient Safety” examined what the authors considered evidence-based practices that might improve patient safety. They examined patient safety targets and listed patient safety practices to reduce or eliminate any adverse outcomes. The evidence of each practice was graded greatest, high, medium, low and lowest. Unfortunately, mistakes were made and some would disagree with the strength of evidence. For example, the ventilator bundle from 2011 used by IHI lists 5 interventions:

- Elevation of the Head of the Bed

- Daily "Sedation Vacations" and Assessment of Readiness to Extubate

- Peptic Ulcer Disease Prophylaxis

- Deep Venous Thrombosis Prophylaxis

- Daily Oral Care with Chlorhexidine

However, in their JAMA article the intervention with the greatest evidence for prevention of ventilator-associated pneumonia was continuous aspiration of subglottic secretions. Semirecumbent positioning and selective decontamination of the digestive tract were listed as having a high strength of evidence. Continuous oscillation was listed as medium strength evidence. Use of sucralfate was listed as lowest strength of evidence. Sedation vacations and oral care with chlorhexidine were not listed. Deep venous thrombosis prophylaxis was listed as prevention of venous thromboembolism, which it clearly achieves, but it does not reduce mortality or prevent pneumonia (33). Peptic ulcer disease prophylaxis with H2 antagonists was listed as medium strength of evidence. The possibility that H2 antagonists might increase pneumonia rather than prevent it was not raised (34).

Similarly, practices listed in the 2005 JAMA article to prevent central line-associated bloodstream infection included antibiotic-impregnated catheters (greatest strength of evidence); chlorhexidine, heparin and catheter tunneling (lower strength of evidence); and routine antibiotic prophylaxis and changing catheters routinely (lowest strength of evidence). However, the IHI central line bundle includes:

- Hand Hygiene

- Maximal Barrier Precautions Upon Insertion

- Chlorhexidine Skin Antisepsis

- Optimal Catheter Site Selection, with Avoidance of the Femoral Vein for Central Venous Access in Adult Patients

- Daily Review of Line Necessity with Prompt Removal of Unnecessary Lines

Although disagreement about the level of evidence may be appropriate, the IHI bundles are clearly discordant with the evidence basis of the interventions as listed in the JAMA article. Furthermore, IHI mixed practices with various levels of evidence into a single bundle and encouraged the performance of all in order to receive “credit” for compliance with the bundle.

Department of Health and Human Services (HHS)

When Berwick became director of HHS’ Centers for Medicare and Medicaid Services (CMS) the IHI’s bundles moved with him. With the Agency for Healthcare Research and Quality (AHRQ), another division of HHS, CMS initiated a vigorous program to prevent hospital-acquired infections backed up with financial penalties for noncompliance. This included bundles mixing practices with various levels of evidence (or no evidence) and requiring compliance with all to receive “credit”, or in this case, avoid financial penalties for noncompliance. CMS referred to this as “value-based performance”.

In an increasingly familiar scenario, AHRQ issued a press release on September 20, 2012 touting the remarkable success of the program to reduce central line-associated bloodstream infections (CLABSIs) (35). The program “…reduced the rate [of CLABSI] … in intensive care units by 40 percent…saving more than 500 lives and avoiding more than $34 million in health care costs” (35). Although the methodology used to determine these numbers was not stated, it seems likely that it followed the model of IHI’s 100,000 Lives Campaign. A program was initiated based on a dubious intervention(s), in this case a 2-page checklist for central line insertion (36). Data on the incidence of infection was determined by billing, another form of self-reporting. The dollars and lives saved were then extrapolated from other publications.

There are several problems with this approach. First, Meddings et al. (37) determined that data on self-reported urinary tract infections were “inaccurate” and “are not valid data sets for comparing hospital acquired catheter-associated urinary tract infection rates for the purpose of public reporting or imposing financial incentives or penalties”. The authors proposed that the nonpayment by Medicare for “reasonably preventable” hospital-acquired complications resulted in this discrepancy. There is no reason to assume that data reported for CLABSI are any more accurate. Further evidence comes from the observation that compliance with most of these bundles does not appear to correlate with outcomes such as mortality or readmission rates (38).

Second, choosing a single article which does not represent the overall body of evidence can be deceiving. In the pneumococcal vaccine example cited earlier, Perlin chose the only article to show a beneficial effect of the 23-polyvalent pneumococcal vaccine in adults (22). Most reviews and meta-analyses do not support the vaccine’s efficacy (23-25).

Third, many of the articles used for calculation of the mortality and cost savings are not risk adjusted. It may seem obvious, but saying that CLABSIs are associated with higher mortality and costs is not the same as saying the mortality and costs were caused by the CLABSI. Central lines are placed in sicker, more unstable patients and their underlying disease, not the CLABSI, could account for the higher mortality and costs. These extrapolated conclusions are not the same as measurement of the mortality and costs in carefully planned and controlled randomized trials.
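The difference between association and causation in unadjusted data can be illustrated with a small worked example. The sketch below, in Python, uses an entirely hypothetical cohort (the patient counts, the two severity strata and the function names are invented for illustration and are not drawn from any study cited here). It is constructed so that CLABSI has no effect on mortality within either severity stratum, yet the crude comparison still shows much higher mortality in patients with CLABSI because they are concentrated in the sicker stratum.

```python
# Hypothetical cohort rows: (severity, has_clabsi, n_patients, n_deaths).
# All numbers are invented for illustration only.
COHORT = [
    ("low",  False, 800, 40),   # 5% mortality
    ("low",  True,   20,  1),   # 5% mortality
    ("high", False, 100, 40),   # 40% mortality
    ("high", True,   80, 32),   # 40% mortality
]


def mortality(rows):
    """Deaths divided by patients across the given rows."""
    patients = sum(n for _, _, n, _ in rows)
    deaths = sum(d for _, _, _, d in rows)
    return deaths / patients


def crude_rate(cohort, has_clabsi):
    """Unadjusted mortality, ignoring severity of illness."""
    return mortality([r for r in cohort if r[1] == has_clabsi])


def stratum_rate(cohort, severity, has_clabsi):
    """Mortality within one severity stratum (a simple risk adjustment)."""
    return mortality(
        [r for r in cohort if r[0] == severity and r[1] == has_clabsi]
    )


if __name__ == "__main__":
    print(f"Crude, CLABSI:    {crude_rate(COHORT, True):.1%}")
    print(f"Crude, no CLABSI: {crude_rate(COHORT, False):.1%}")
    for severity in ("low", "high"):
        with_inf = stratum_rate(COHORT, severity, True)
        without = stratum_rate(COHORT, severity, False)
        print(f"{severity:>4} severity: {with_inf:.1%} vs {without:.1%}")
```

In this constructed cohort the crude mortality is 33% with CLABSI and about 9% without, while within each severity stratum the rates are identical. Extrapolating "lives saved" from the crude difference would credit infection prevention with deaths that are explained by underlying illness.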

Discussion

Review of the quality movement reveals a dizzying array of pseudo-regulatory organizations and ever-changing programs and guidelines. The National Guideline Clearinghouse lists in excess of 15,000 guidelines (39). This explosion of directives appears to undergo little to no oversight, with no checks to ensure that these guidelines are evidence-based.

This manuscript reviewed some of the more prominent programs to improve healthcare and has found them sadly wanting (Table 1). Overall the science has been poor and evidence of improvement in patient outcomes lacking. It is unclear if the present programs have improved on the tedious, costly and nonproductive medical audits of the 1970’s (7). Like the audits, present quality programs appear to be more a matter of paper compliance. In the shuffle of paperwork, hospitals and regulatory agencies seem to have lost sight of the fact that the purpose is to improve healthcare, not to fulfill a political or financial goal.

As hospitals struggle to decrease complication rates in order to receive better reimbursement for “value-based performance” several likely strategies may evolve. One is to lie about the data. According to Meddings (37) this is apparently happening with urinary tract infections and clearly was happening with ventilator-associated pneumonia (12). The accuracy of the data submitted by a hospital’s quality manager, under intense scrutiny from the CEO or board to demonstrate the hospital’s success in quality improvement, is rarely questioned if it shows an improvement. This may be particularly true now that many CEOs and managers are operating under incentive systems that tie bonuses to quality performance (14). Tying hospital reimbursement to quality performance may induce similar discrepancies. Another rather obvious strategy to increase reimbursement would be to prevent complications by not performing interventions such as urinary tract catheters or central lines. Whether this is happening is not clear but seems likely. It is also unclear whether this will be beneficial or harmful to patients.

It is difficult to argue that a complication might be good for a patient. However, some of the hospital-acquired infections and readmission rates correlate with improved mortality (38). The reason for this is not clear but could represent a minor complication of what are best practices that benefit the majority of patients.

According to Patrick Conway, CMS Chief Medical Officer and Deputy Administrator for Innovation and Quality, CMS will be reorienting and aligning measures around patient-centered outcomes (40). Readmission rates for certain disorders are already part of CMS’ formula for reimbursement, and adjustments based on readmissions for COPD will begin in 2014. It is unclear at this juncture if other traditional outcome measures such as mortality, morbidity, hospital length of stay and cost will also be considered. These would likely be an improvement over the “value-based performance” measures, many of which either do not correlate or correlate inversely with outcomes.

The explosion in the number of groups attempting to improve quality and safety raises the question of how target practices should be selected. It is unclear if private organizations should be setting a national agenda for change. The 100,000 Lives Campaign allowed IHI to receive credit for many things that would have happened anyway (14). The campaign created a landslide of “brand recognition” for IHI, and undoubtedly led to substantial new revenues and philanthropic dollars. A conflict (or, at very least, appearance of conflict) is unavoidable. A federal agency or regulator would not be vulnerable to such concerns.

Professional organizations need to do their part in improving the quality of medical care. Many, if not most, professional organizations have rushed to publish guidelines. Unfortunately, the evidence basis for these guidelines has been little better than that for the IHI, Joint Commission or IOM recommendations. Lee and Vielemeyer (41) found that only 14% of the Infectious Diseases Society of America (IDSA) guidelines are based on level I evidence (data from ≥1 properly randomized controlled trial). Much of this 14% and the 86% that are below level I evidence will eventually be proven wrong (42,43). It is doubtful that other medical societies are performing much better.

Medical journals also need to do their part. Reviewers and editors need to evaluate manuscripts regarding “quality medical care” with the same scientific skepticism applied to other articles. Randomized trials should not only be applied to diagnostic and therapeutic interventions but just as vigorously applied to formulation and implementation of guidelines and other interventions designed to improve medical care quality. Too often interventions based on either weak or no evidence become ingrained in medical practice when papers with questionable methods and/or outcomes are published. Journals should not allow authors to describe an intervention as improving quality of medical care without a definition of quality and without an accompanying demonstration of an improvement in patient-centered outcomes.

Lastly, physicians need to do their part. Physicians should reevaluate their participation and financial support of medical societies that author or support non- or weakly evidenced guidelines. They should oppose quality programs introduced into medical practice that are not based on level I evidence (at least one randomized trial). That the IHI was able to introduce a program such as the 100,000 Lives Campaign into hospital practice based on weak or non-evidence based interventions such as rapid response teams, central line insertion guidelines and ventilator-associated pneumonia guidelines is disturbing. That the IHI was able to declare that implementation of their interventions saved 122,300 lives based on the sloppy collection of self-reported data is equally disturbing. That these interventions persist to this day is perhaps most disturbing of all. As taught by Flexner nearly a century ago, only through application of scientific principles and vigorous review of interventions will the quality of medical care improve.

References

- Maindonald J, Richardson AM. This passionate study: a dialogue with Florence Nightingale. Journal of Statistics Education 2004;12(1). Available at: www.amstat.org/publications/jse/v12n1/maindonald.html (accessed 12/5/13).

- Flexner A. Medical Education in the United States and Canada: A Report to the Carnegie Foundation for the Advancement of Teaching. Bulletin 4. 1910. New York: Carnegie Foundation.

- Lembcke PA. Evolution of the medical audit. JAMA. 1967;199(8):543-50.

- Borus ME, Buntz CG, Tash WR. Evaluating the Impact of Health Programs: A Primer. 1982. Cambridge, MA: MIT Press.

- Baker F. Quality assurance and program evaluation. Eval Health Prof. 1983;6(2):149-60.

- Donabedian A. Evaluating the quality of medical care. 1966. Milbank Q. 2005;83(4):691-729.

- Affeldt JE. The new quality assurance standard of the Joint Commission on Accreditation of Hospitals. West J Med. 1980;132:166-70.

- Affeldt JE, Roberts JS, Walczak RM. Quality assurance. Its origin, status, and future direction--a JCAH perspective. Eval Health Prof. 1983;6(2):245-55.

- Institute for Healthcare Improvement Open School. US Directory. Available at: http://www.ihi.org/offerings/IHIOpenSchool/Chapters/Pages/ChapterDirectoryUnitedStates.aspx (accessed 12/7/13).

- Hillman K, Chen J, Cretikos M, Bellomo R, Brown D, Doig G, Finfer S, Flabouris A; MERIT study investigators. Introduction of the medical emergency team (MET) system: a cluster-randomised controlled trial. Lancet. 2005;365(9477):2091-7.

- Hurley J, Garciaorr R, Luedy H, Jivcu C, Wissa E, Jewell J, Whiting T, Gerkin R, Singarajah CU, Robbins RA. Correlation of compliance with central line associated blood stream infection guidelines and outcomes: a review of the evidence. Southwest J Pulm Crit Care 2012;4:163-73.

- Padrnos L, Bui T, Pattee JJ, Whitmore EJ, Iqbal M, Lee S, Singarajah CU, Robbins RA. Analysis of overall level of evidence behind the Institute of Healthcare Improvement ventilator-associated pneumonia guidelines. Southwest J Pulm Crit Care 2011;3:40-8.

- Institute for Healthcare Improvement. IHI announces that hospitals participating in 100,000 lives campaign have saved an estimated 122,300 lives. Available at: http://www.ihi.org/about/news/documents/ihipressrelease_hospitalsin100000livescampaignhavesaved122300lives_jun06.pdf (accessed 12/7/13).

- Wachter RM, Pronovost PJ. The 100,000 Lives Campaign: A scientific and policy review. Jt Comm J Qual Patient Saf. 2006;32(11):621-7. [PubMed]

- Institute for Healthcare Improvement. 5 million lives campaign. Available at: http://www.ihi.org/about/Documents/5MillionLivesCampaignCaseStatement.pdf (accessed 12/7/13).

- DerGurahian J. IHI unsure about impact of 5 Million campaign. Available at: http://www.modernhealthcare.com/article/20081210/NEWS/312109976 (accessed 12/7/13).

- Ken Kizer transforming the VA. Available at: http://www.medsphere.com/transforming-the-va (accessed 12/19/13).

- Robbins RA. Profiles in medical courage: of mice, maggots and Steve Klotz. Southwest J Pulm Crit Care. 2012;4:71-7.

- Jha AK, Perlin JB, Kizer KW, Dudley RA. Effect of the transformation of the Veterans Affairs Health Care System on the quality of care. N Engl J Med. 2003;348(22):2218-27. [CrossRef] [PubMed]

- Robbins RA, Klotz SA. Quality of care in US hospitals. N Engl J Med. 2005;353(17):1860-1. [CrossRef] [PubMed]

- Perlin JB. Prevention in the 21st century: using advanced technology and care models to move from the hospital and clinic to the community and caring. Presented at the First Annual VA Preventive Medicine Training Conference, Albuquerque, NM, August 11, 2003.

- Nichol KL, Baken L, Wuorenma J, Nelson A. The health and economic benefits associated with pneumococcal vaccination of elderly persons with chronic lung disease. Arch Intern Med. 1999;159(20):2437-42. [CrossRef] [PubMed]

- Fine MJ, Smith MA, Carson CA, Meffe F, Sankey SS, Weissfeld LA, Detsky AS, Kapoor WN. Efficacy of pneumococcal vaccination in adults. A meta-analysis of randomized controlled trials. Arch Intern Med. 1994;154:2666-77. [CrossRef] [PubMed]

- Dear K, Holden J, Andrews R, Tatham D. Vaccines for preventing pneumococcal infection in adults. Cochrane Database Syst Rev. 2003:CD000422. [PubMed]

- Huss A, Scott P, Stuck AE, Trotter C, Egger M. Efficacy of pneumococcal vaccination in adults: a meta-analysis. CMAJ 2009;180:48-58. [CrossRef] [PubMed]

- Robbins RA. August 2013 pulmonary journal club: pneumococcal vaccine déjà vu. Southwest J Pulm Crit Care. 2013;7(2):131-4. [CrossRef]

- Institute of Medicine. Available at: http://www.iom.edu/About-IOM.aspx (accessed 12/20/13).

- Kohn LT, Corrigan J, Donaldson MS, Institute of Medicine (U.S.) Committee on Quality of Health Care in America. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Pr; 1999.

- Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, Lawthers AG, Newhouse JP, Weiler PC, Hiatt HH. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. N Engl J Med. 1991;324(6):370-6. [CrossRef] [PubMed]

- Thomas EJ, Studdert DM, Burstin HR, Orav EJ, Zeena T, Williams EJ, Howard KM, Weiler PC, Brennan TA. Incidence and types of adverse events and negligent care in Utah and Colorado. Med Care. 2000;38(3):261-71. [CrossRef] [PubMed]

- Hofer TP, Kerr EA, Hayward RA. What is an error? Eff Clin Pract. 2000;3(6):261-9. [PubMed]

- Leape LL, Berwick DM, Bates DW. What practices will most improve safety? Evidence-based medicine meets patient safety. JAMA. 2002;288(4):501-7. [CrossRef] [PubMed]

- Dentali F, Douketis JD, Gianni M, Lim W, Crowther MA. Meta-analysis: anticoagulant prophylaxis to prevent symptomatic venous thromboembolism in hospitalized medical patients. Ann Intern Med. 2007;146(4):278-88. [CrossRef] [PubMed]

- Marik PE, Vasu T, Hirani A, Pachinburavan M. Stress ulcer prophylaxis in the new millennium: a systematic review and meta-analysis. Crit Care Med. 2010;38(11):2222-8. [CrossRef] [PubMed]

- AHRQ Patient Safety Project Reduces Bloodstream Infections by 40 Percent. September 2012. Agency for Healthcare Research and Quality, Rockville, MD. Available at: http://www.ahrq.gov/news/newsroom/press-releases/2012/20120910.html (accessed 12/23/13).

- Central Line Insertion Care Team Checklist. May 2009. Agency for Healthcare Research and Quality, Rockville, MD. Available at: http://www.ahrq.gov/professionals/quality-patient-safety/patient-safety-resources/resources/cli-checklist.html (accessed 12/23/13).

- Meddings JA, Reichert H, Rogers MA, Saint S, Stephansky J, McMahon LF. Effect of nonpayment for hospital-acquired, catheter-associated urinary tract infection: a statewide analysis. Ann Intern Med. 2012;157:305-12. [CrossRef] [PubMed]

- Robbins RA, Gerkin RD. Comparisons between Medicare mortality, morbidity, readmission and complications. Southwest J Pulm Crit Care. 2013;6(6):278-86.

- National Guideline Clearinghouse. Agency for Healthcare Research and Quality, Rockville, MD. Available at: http://www.guideline.gov/browse/by-topic.aspx (accessed 12/23/13).

- Conway PH, Mostashari F, Clancy C. The future of quality measurement for improvement and accountability. JAMA. 2013;309(21):2215-6. [CrossRef] [PubMed]

- Lee DH, Vielemeyer O. Analysis of overall level of evidence behind infectious diseases society of America practice guidelines. Arch Intern Med. 2011;171:18-22. [CrossRef] [PubMed]

- Prasad V, Vandross A, Toomey C, et al. A decade of reversal: an analysis of 146 contradicted medical practices. Mayo Clin Proc. 2013;88(8):790-8. [CrossRef] [PubMed]

- Villas Boas PJ, Spagnuolo RS, Kamegasawa A, et al. Systematic reviews showed insufficient evidence for clinical practice in 2004: what about in 2011? The next appeal for the evidence-based medicine age. J Eval Clin Pract. 2013;19(4):633-7. [CrossRef] [PubMed]

Reference as: Robbins RA. The unfulfilled promise of the quality movement. Southwest J Pulm Crit Care. 2014;8(1):50-63. doi: http://dx.doi.org/10.13175/swjpcc181-13
